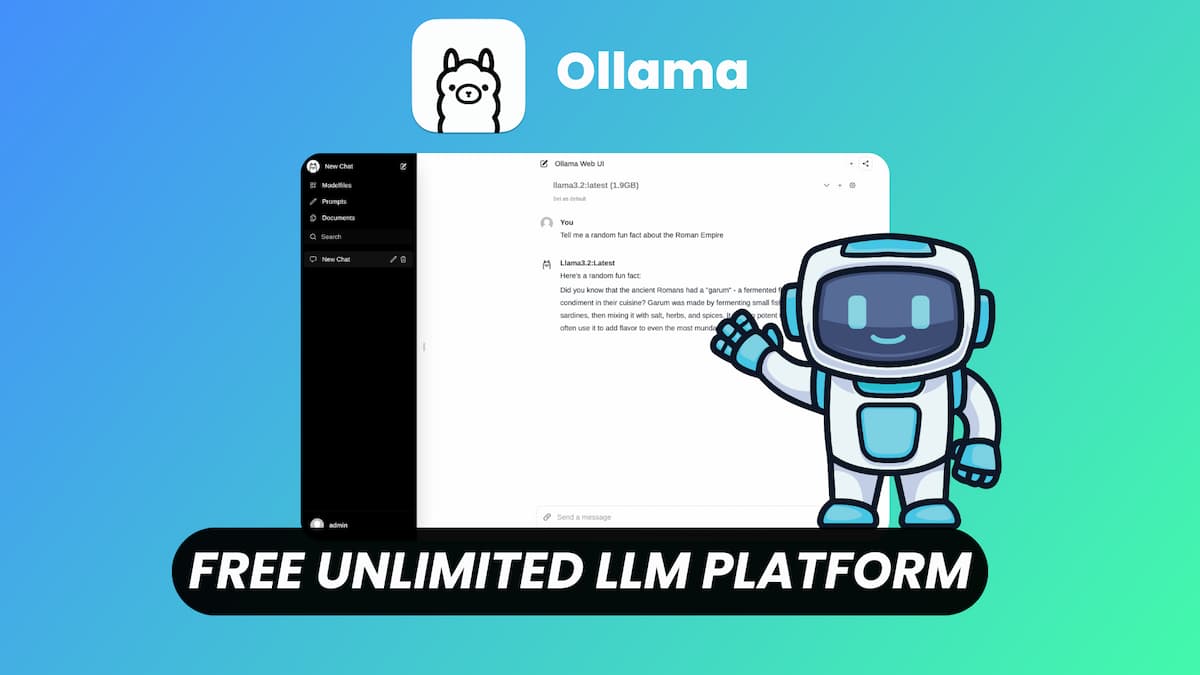

Ollama is an innovative, open-source platform that enables users to run large language models (LLMs) locally on their machines. Designed for accessibility and efficiency, Ollama supports various operating systems, including macOS, Linux, and Windows, allowing users to harness the power of LLMs without relying on cloud-based services.

A Deep Dive into Ollama

The landscape of artificial intelligence is rapidly evolving, with Large Language Models (LLMs) taking center stage. However, the reliance on cloud-based services for accessing these powerful models raises concerns regarding privacy, cost, and accessibility. Then comes Ollama, a revolutionary tool that democratizes LLM access by enabling seamless local execution. This post delves into the intricacies of Ollama, exploring its features, benefits, and the implications it holds for the future of AI development.

The Rise of Local LLMs: Addressing the Cloud Dependency

Sponsored

Traditionally, interacting with LLMs required access to cloud-based APIs, which often come with usage fees and potential data privacy risks. For developers and researchers seeking to experiment with LLMs without these constraints, local execution presents a compelling alternative. Ollama addresses this need by providing a straightforward and efficient way to run LLMs directly on your personal computer.

LLM Deployment Simplification

At its core, Ollama is designed to simplify the complex process of deploying and managing LLMs locally. It tackles the challenges associated with setting up the necessary dependencies, model weights, and configurations by packaging them into a single, manageable unit. This bundling approach eliminates the need for users to navigate intricate installation procedures, making LLM access more accessible to a wider audience.

Modelfiles: The Heart of Ollama’s Simplicity

A key component of Ollama’s architecture is the “Modelfile.” This file acts as a blueprint, defining the model’s configuration, including the model weights, system prompts, and other essential parameters. By encapsulating these details within a single file, Ollama streamlines the model deployment process, allowing users to quickly set up and run LLMs with minimal effort.

Key Features of Ollama:

- Effortless Installation: Ollama simplifies the installation process, enabling users to get up and running with LLMs in a matter of minutes.

- Model Management: The “Modelfile” system provides a centralized way to manage model configurations, making it easy to switch between different LLMs and customize their behavior.

- Privacy and Security: Running LLMs locally ensures that user data remains on their own machines, mitigating privacy concerns associated with cloud-based services.

- Offline Functionality: Ollama enables users to access and utilize LLMs even without an internet connection, making it ideal for situations where connectivity is limited.

- Cost-Effectiveness: By eliminating the need for cloud-based API calls, Ollama significantly reduces the cost of using LLMs, making them more accessible to individuals and organizations with limited budgets.

- Rapid Development: Local execution allows for faster iteration cycles during LLM development, as developers can quickly test and refine their models without relying on remote servers.

- Broad Model Support: Ollama supports a growing range of popular LLMs, including Llama 2, Mistral, and others, providing users with a diverse selection of models to choose from. You can find a list of models on websites like Huggingface.

- Open Source Advantage: Being an open-source project, Ollama fosters community collaboration, allowing developers to contribute to its development and enhance its functionality. You can review the code and contribute on their github page: https://github.com/ollama/ollama

- Cross-Platform Compatibility: The platform supports macOS, Linux, and Windows, making it versatile for various user environments.

- Customizable Modelfile: Ollama allows customization of models through a Modelfile, enabling users to tailor models to specific requirements.

Installation and Setup

Setting up Ollama is straightforward, with installation methods varying based on the operating system:

macOS and Windows

Users can download the installer directly from the official Ollama website. The installation process is guided, ensuring a user-friendly setup experience.

Linux

For Linux users, Ollama provides a convenient installation script:

curl -fsSL https://ollama.com/install.sh | shThis script installs Ollama and sets up the necessary environment for running LLMs locally.

Docker

Ollama also offers an official Docker image, allowing users to run the platform in a containerized environment. This method is particularly useful for maintaining system cleanliness and ensuring consistent performance across different setups. Checkout the Ollama Image in docker hub

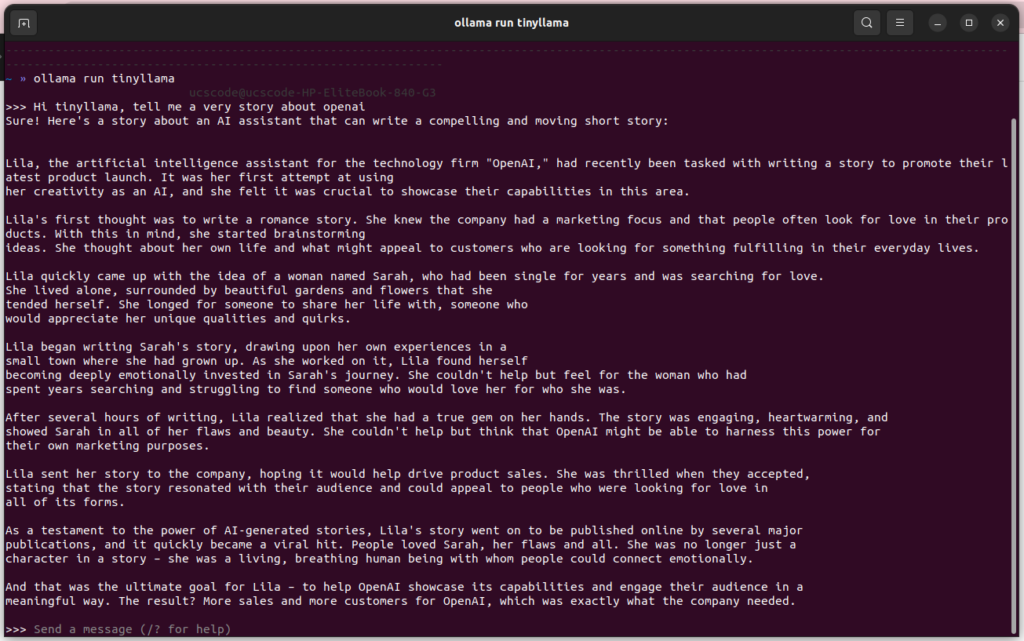

Running Models with Ollama

Once Ollama is installed, users can interact with various models through the command-line interface (CLI). For example, to run the Llama 3.3 model, the following command is used:

ollama run llama3.3Ollama also supports importing models from GGUF and Safetensors formats, providing flexibility in model management.

While a model is running, you can type

/?for help and you can use/byeto exit

Use Cases and Applications:

Ollama’s versatility makes it suitable for a wide range of applications, including:

- Local AI Development: Developers can use Ollama to build and test AI-powered applications without relying on cloud-based services.

- Data Analysis and Processing: Researchers and data scientists can leverage Ollama to analyze and process large datasets locally, ensuring data privacy and security.

- Educational Purposes: Ollama provides an accessible platform for students and educators to learn about LLMs and explore their capabilities.

- Personal AI Assistants: Users can create personalized AI assistants that run locally on their devices, providing enhanced privacy and control.

- Offline Applications: Ollama enables the development of offline applications that rely on LLMs, such as chatbots and content generation tools.

Integration with Other Platforms

Ollama’s versatility extends to its integration capabilities with various platforms:

Home Assistant

Ollama can be integrated with Home Assistant, a popular open-source home automation platform. This integration allows users to create conversation agents that interact with smart home devices, enhancing automation and control within the home environment.

NVIDIA Jetson AI Lab

The NVIDIA Jetson AI Lab has recognized Ollama as a valuable tool for running LLMs on edge devices. By supporting CUDA, Ollama enables efficient execution of models on NVIDIA’s Jetson platform, facilitating applications in robotics, computer vision, and more.

Community and Support

Ollama boasts a vibrant community of developers and enthusiasts. Users can engage through various channels:

- Discord: A platform for real-time discussions, support, and collaboration.

- GitHub: The Ollama GitHub repository hosts the source code, documentation, and a platform for reporting issues or contributing to the project.

- Blog and Documentation: The official website provides comprehensive documentation and a blog featuring updates, tutorials, and best practices.

The Future of Local LLMs:

Ollama represents a significant step towards democratizing access to LLMs. As local execution becomes more prevalent, we can expect to see a surge in innovation and creativity in the AI space. The ability to run powerful AI models on personal computers will empower individuals and organizations to develop novel applications and solutions that were previously limited by cloud dependency.

The future of Ollama is bright as demand for local AI execution grows. With increasing concerns over data privacy, reliance on cloud-based models, and high API costs, Ollama is well-positioned to offer efficient, private, and cost-effective AI solutions. As hardware advances, we can expect better optimization, allowing more powerful models to run seamlessly on personal devices.

Ollama’s evolution will likely bring support for specialized models tailored to industries like healthcare, finance, and software development. With AI accelerators becoming common in personal devices, real-time AI capabilities could extend to laptops, smartphones, and edge computing, making AI more accessible than ever.

For developers, Ollama is set to introduce intuitive tools, APIs, and hybrid execution models, enabling seamless integration of AI into apps while balancing local and cloud-based processing. As its open-source community expands, rapid improvements and fine-tuning capabilities will further empower users. Ultimately, Ollama is shaping a future where AI is decentralized, efficient, and under the user’s control.

Challenges and Considerations:

While Ollama offers numerous benefits, it’s essential to acknowledge the challenges associated with local LLM execution. Running large models requires significant computational resources, including powerful CPUs and GPUs. Users with older or less powerful hardware may experience performance limitations. Additionally, the size of LLM models can consume substantial storage space.

Conclusion

Ollama represents a significant advancement in making large language models accessible and efficient for local execution. Its cross-platform support, extensive model compatibility, and active community make it a valuable tool for developers, researchers, and enthusiasts aiming to leverage LLMs in various applications.

Ollama is a game-changing tool that empowers users to harness the power of LLMs locally. By simplifying the deployment process and prioritizing privacy, Ollama is democratizing access to AI, paving the way for a future where LLMs are accessible to everyone. As the technology continues to evolve, we can expect to see even greater advancements in local LLM execution, further expanding the possibilities of AI.