Imagine you’ve meticulously crafted a complex application, a symphony of interconnected services, each playing a vital role. You’ve carefully packaged each service into its own Docker container, creating isolated, self-contained units that function seamlessly. But as your application grows, orchestrating these containers across multiple machines becomes increasingly challenging. This is where Kubernetes enters the stage, elevating container management to an entirely new level.

While Docker provides a robust foundation for building and running applications in containers, Kubernetes takes it a step further, offering a comprehensive platform for deploying, managing, and scaling containerized workloads across clusters of machines.

Let’s examine the benefits Kubernetes offers beyond what Docker provides on its own, and how it helps developers deploy, run, and maintain modern applications with greater resilience and efficiency.

Orchestration Beyond Individual Containers:

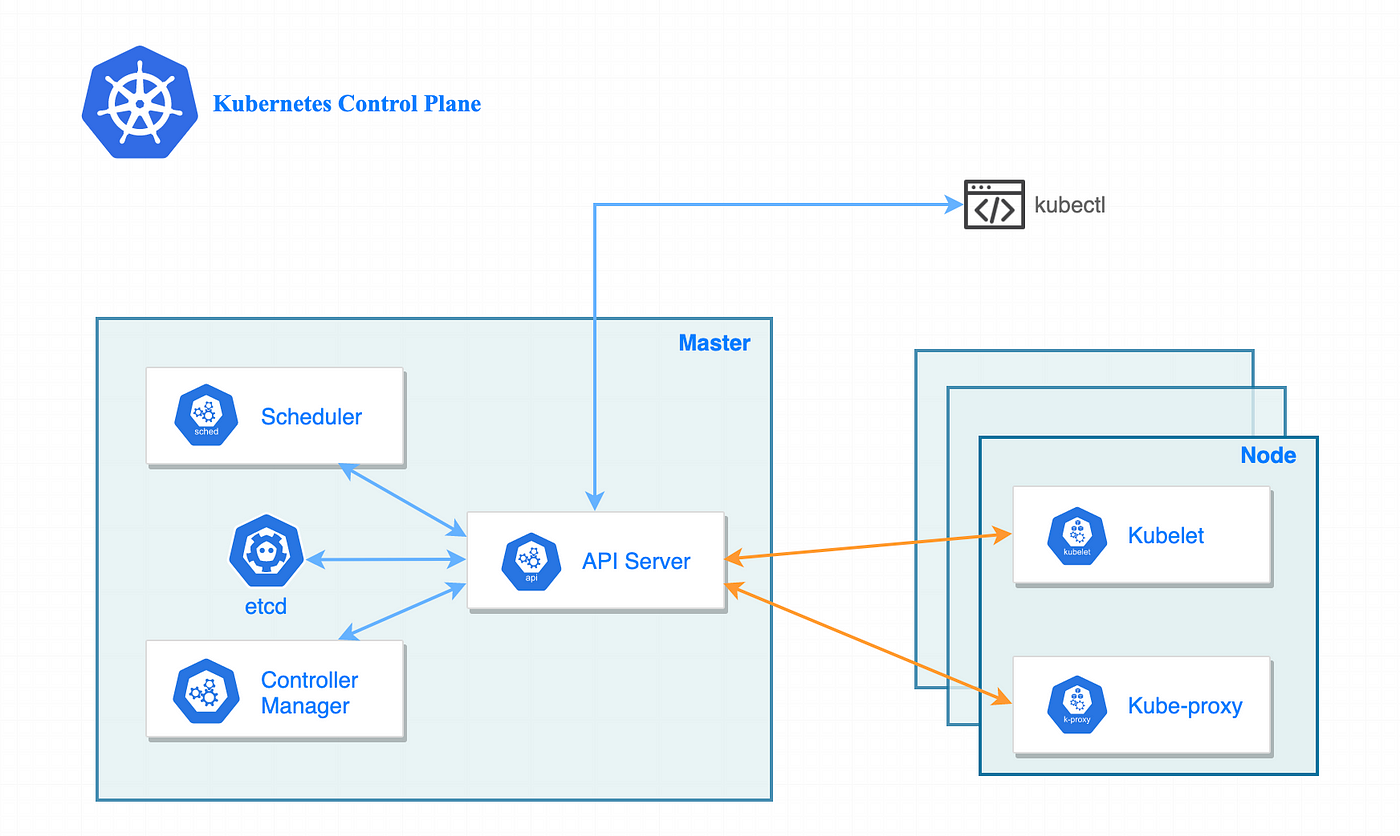

Docker excels in packaging individual applications into containers, providing a consistent environment for execution regardless of the underlying infrastructure. However, managing numerous containers across multiple hosts requires a sophisticated orchestration layer. This is where Kubernetes shines. It treats containerized applications as a cohesive whole, allowing you to define and manage their deployment, scaling, networking, and resource allocation with granular precision.

Imagine deploying an application with multiple interconnected services: a web server, a database, and a message queue. Docker lets you create a container for each service, but Kubernetes goes further by orchestrating how those containers run together. It schedules each service onto healthy nodes with enough capacity, can scale services automatically based on demand once you configure an autoscaler, and gives each service a stable network address so the others can reach it.
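
To make the placement step concrete, here is a toy scheduling function in Python. It is a deliberately simplified sketch, not kube-scheduler's actual algorithm, though it loosely mirrors the scheduler's filtering and scoring phases. The `Node` class, its fields, and the scoring rule (prefer the node with the most CPU left over, in the spirit of a least-allocated strategy) are assumptions made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical model of a cluster node's state."""
    name: str
    ready: bool
    cpu_millis: int       # allocatable CPU, in millicores
    memory_mib: int       # allocatable memory, in MiB
    used_cpu: int = 0     # CPU already requested by Pods placed here
    used_memory: int = 0  # memory already requested by Pods placed here


def schedule(cpu: int, memory: int, nodes: list[Node]) -> str | None:
    """Pick a node for a Pod with the given requests, or None if nothing fits."""
    # Filter: keep Ready nodes with enough unreserved CPU and memory.
    feasible = [
        n for n in nodes
        if n.ready
        and n.cpu_millis - n.used_cpu >= cpu
        and n.memory_mib - n.used_memory >= memory
    ]
    if not feasible:
        return None  # a real scheduler would leave the Pod Pending
    # Score: prefer the node with the most CPU left after placement.
    best = max(feasible, key=lambda n: n.cpu_millis - n.used_cpu - cpu)
    best.used_cpu += cpu
    best.used_memory += memory
    return best.name


nodes = [
    Node("node-a", ready=True, cpu_millis=2000, memory_mib=4096),
    Node("node-b", ready=False, cpu_millis=4000, memory_mib=8192),  # unhealthy
    Node("node-c", ready=True, cpu_millis=3000, memory_mib=8192),
]
services = {"web": (500, 512), "database": (1000, 2048), "queue": (250, 256)}
placements = {name: schedule(cpu, mem, nodes) for name, (cpu, mem) in services.items()}
print(placements)  # {'web': 'node-c', 'database': 'node-c', 'queue': 'node-a'}
```

Note that `node-b` is never chosen despite having the most capacity, because it is not ready.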

Automated Rolling Updates and Rollbacks:

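Deploying updates to applications can be a delicate dance, potentially disrupting the user experience if not executed flawlessly. Kubernetes Deployments simplify this with automated rolling updates: Pods running the old version are progressively replaced with Pods running the new one, so only a fraction of the workload is affected at any given time. Two settings, `maxSurge` and `maxUnavailable`, bound how many extra Pods may be created and how many may be unavailable during the rollout. If the new version fails its readiness checks, the rollout stalls instead of replacing the remaining healthy Pods, and you can return to the previous revision with `kubectl rollout undo`, minimizing downtime and service interruptions.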
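
Rolling updates in a Deployment are bounded by two settings, `maxSurge` and `maxUnavailable`. Per the Deployment documentation, both default to 25%, and percentages round up for `maxSurge` and down for `maxUnavailable`. The Python sketch below computes those bounds and simulates a rollout step by step. The simulation is a simplification that assumes every new Pod becomes ready immediately; the real Deployment controller waits for readiness before draining more old Pods, which is what makes a failing rollout stall. The function names are illustrative.

```python
from __future__ import annotations

import math


def rollout_bounds(replicas: int, max_surge_pct: int = 25,
                   max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (max total Pods, min available Pods) during a rolling update."""
    surge = math.ceil(replicas * max_surge_pct / 100)               # rounds up
    unavailable = math.floor(replicas * max_unavailable_pct / 100)  # rounds down
    if surge == 0 and unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be zero")
    return replicas + surge, replicas - unavailable


def simulate_rollout(replicas: int, **pcts: int) -> list[tuple[int, int]]:
    """Simulate (old, new) Pod counts per step, assuming new Pods are ready at once."""
    max_total, min_available = rollout_bounds(replicas, **pcts)
    old, new = replicas, 0
    steps = []
    while old > 0 or new < replicas:
        # Surge: start new Pods without exceeding the maximum total.
        new += min(replicas - new, max_total - (old + new))
        # Drain: stop old Pods while keeping at least min_available running.
        old -= min(old, (old + new) - min_available)
        steps.append((old, new))
    return steps


print(rollout_bounds(10))   # (13, 8)
print(simulate_rollout(4))  # [(2, 1), (0, 3), (0, 4)]
```

With 10 replicas and the defaults, at most 13 Pods exist at once and at least 8 stay available throughout the update.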

Self-Healing Capabilities:

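Kubernetes has a built-in self-healing mechanism: it continuously compares the actual state of your containers and nodes with the state you declared. If a container crashes, the kubelet on that node restarts it according to the Pod's restart policy. If a node becomes unavailable, then after a configurable timeout, controllers such as the ReplicaSet behind a Deployment create replacement Pods, which the scheduler places on healthy nodes. Combined with multiple replicas spread across nodes, this provides high availability and fault tolerance, protecting your application from the failure of any single container or node.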
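
The control loop behind this can be sketched as a reconciliation function. This is a toy model built on stated assumptions: Pods are a plain dictionary from a hypothetical Pod name to a node name, node health is given as a list, and replacements go to the least-loaded healthy node. It covers only the node-failure case; restarting a crashed container in place is the kubelet's job and is not modeled here.

```python
from __future__ import annotations

from collections import Counter
from collections.abc import Iterator
from itertools import count


def reconcile(desired: int, pods: dict[str, str], healthy_nodes: list[str],
              ids: Iterator[int]) -> dict[str, str]:
    """One pass of a simplified ReplicaSet-style control loop.

    `pods` maps Pod name -> node name; a new mapping is returned.
    """
    if not healthy_nodes:
        raise RuntimeError("no healthy nodes to schedule onto")
    # Pods on nodes that are no longer healthy are treated as lost.
    current = {pod: node for pod, node in pods.items() if node in healthy_nodes}
    # Too few Pods: create replacements on the least-loaded healthy node.
    while len(current) < desired:
        load = Counter({node: 0 for node in healthy_nodes})
        load.update(current.values())
        target = min(healthy_nodes, key=lambda node: load[node])
        current[f"pod-{next(ids)}"] = target
    # Too many Pods (e.g. replicas were reduced): delete the surplus.
    for pod in sorted(current)[desired:]:
        del current[pod]
    return current


ids = count(3)
pods = {"pod-0": "node-a", "pod-1": "node-b", "pod-2": "node-b"}
# node-b has failed; node-c is healthy and empty.
pods = reconcile(3, pods, ["node-a", "node-c"], ids)
print(pods)  # {'pod-0': 'node-a', 'pod-3': 'node-c', 'pod-4': 'node-a'}
```

Running the same function repeatedly is harmless: once actual state matches the desired replica count, a pass changes nothing.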

Scalability On Demand:

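As your application grows in popularity or faces unexpected surges in traffic, Kubernetes lets you scale dynamically. With the Horizontal Pod Autoscaler, you define scaling policies based on metrics such as CPU utilization or, with a custom metrics adapter, request rate. When demand increases, Kubernetes adds Pod replicas and spreads them across the available nodes; if the cluster itself runs out of capacity, a cluster autoscaler (an add-on, where your environment supports it) can provision more nodes. This helps maintain performance during peak hours and lets the workload shrink again when demand subsides.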
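
The Horizontal Pod Autoscaler's core calculation, as described in the Kubernetes documentation, is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, and it skips scaling when that ratio is within a tolerance (0.1 by default) of 1.0. The Python sketch below applies the formula and clamps the result to minimum and maximum replica counts. The function name and defaults are illustrative, and the real controller also applies stabilization windows and other scaling behavior not shown here.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Compute an HPA-style replica count for a single metric."""
    ratio = current_metric / target_metric
    # Inside the tolerance band: keep the current count to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current
    desired = math.ceil(current * ratio)
    # Respect the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))   # 6: scale out
print(desired_replicas(4, current_metric=62, target_metric=60))   # 4: within tolerance
print(desired_replicas(10, current_metric=20, target_metric=60))  # 4: scale in
print(desired_replicas(8, current_metric=150, target_metric=50))  # 10: capped at max
```

Here the metric values can be read as average CPU utilization percentages against a 60% (or 50%) target.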

Resource Optimization:

Kubernetes lets you declare resource requests and limits for each container. The scheduler uses requests to place Pods only on nodes with enough unreserved CPU and memory, while limits cap what a container may consume: a container that exceeds its memory limit may be terminated (OOM-killed), and one that reaches its CPU limit is throttled. At the namespace level, resource quotas cap the total resources a team or application can claim, preventing individual workloads from monopolizing the cluster and degrading overall performance. This granular control lets you pack workloads efficiently onto your infrastructure.
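
Requests, limits, and quotas are written as Kubernetes quantities such as `500m` (half a CPU core) or `512Mi` (512 mebibytes). The Python sketch below parses a small subset of that notation and performs a simplified version of the check a `ResourceQuota` enforces when a Pod is created: the namespace's current total plus the new Pod's requests must stay within the hard limits. The function names and dictionary shapes are assumptions; the keys `requests.cpu` and `requests.memory` mirror real ResourceQuota fields.

```python
from __future__ import annotations

from decimal import Decimal

# Multipliers for a subset of Kubernetes quantity suffixes.
SUFFIXES = {
    "m": Decimal("0.001"),                                             # milli
    "k": Decimal(10**3), "M": Decimal(10**6), "G": Decimal(10**9),     # decimal SI
    "Ki": Decimal(2**10), "Mi": Decimal(2**20), "Gi": Decimal(2**30),  # binary
}


def parse_quantity(text: str) -> Decimal:
    """Convert strings like '500m', '2' or '512Mi' into plain numbers."""
    for suffix, multiplier in SUFFIXES.items():
        if text.endswith(suffix):
            return Decimal(text[: -len(suffix)]) * multiplier
    return Decimal(text)


def admit(pod_requests: dict[str, str], used: dict[str, str],
          hard: dict[str, str]) -> bool:
    """Return True if the Pod's requests fit within the namespace quota."""
    for resource, limit in hard.items():
        if resource not in pod_requests:
            # The quota system may reject Pods that omit a request for a
            # tracked resource; this sketch always rejects them.
            return False
        total = (parse_quantity(used.get(resource, "0"))
                 + parse_quantity(pod_requests[resource]))
        if total > parse_quantity(limit):
            return False
    return True


hard = {"requests.cpu": "2", "requests.memory": "4Gi"}
used = {"requests.cpu": "1500m", "requests.memory": "1Gi"}
print(admit({"requests.cpu": "500m", "requests.memory": "1Gi"}, used, hard))  # True
print(admit({"requests.cpu": "600m", "requests.memory": "1Gi"}, used, hard))  # False
```

The second Pod is rejected because it would push the namespace's CPU requests to 2.1 cores, above the 2-core quota.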

Service Discovery and Load Balancing:

Kubernetes simplifies communication within a distributed application with built-in service discovery. Instead of containers registering themselves, you define a Service that selects Pods by label; Kubernetes keeps the Service's list of endpoints in sync with the matching Pods that are ready, and gives the Service a stable virtual IP address and DNS name (with the default cluster domain, a Service named `web` in the `default` namespace is reachable as `web.default.svc.cluster.local`). Traffic sent to the Service is load-balanced across those Pods, so no single instance is overloaded.
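
Label-based selection is what links a Service to its Pods. The sketch below models it with a hypothetical `Pod` class and two plain functions: a Pod becomes an endpoint when it is ready and its labels contain every key/value pair in the selector, and requests are then spread over the resulting endpoints. Round-robin is an assumption made for simplicity; how traffic is actually distributed depends on the kube-proxy mode (the iptables mode, for example, picks a backend at random).

```python
from __future__ import annotations

from dataclasses import dataclass, field
from itertools import cycle, islice


@dataclass
class Pod:
    ip: str
    labels: dict[str, str] = field(default_factory=dict)
    ready: bool = True


def endpoints(selector: dict[str, str], pods: list[Pod]) -> list[str]:
    """IPs of ready Pods whose labels include every key/value in the selector."""
    return [
        pod.ip for pod in pods
        if pod.ready and all(pod.labels.get(k) == v for k, v in selector.items())
    ]


def round_robin(ips: list[str], n: int) -> list[str]:
    """Pick backends for the next n requests by cycling through the endpoints."""
    return list(islice(cycle(ips), n))


pods = [
    Pod("10.0.0.1", {"app": "web"}),
    Pod("10.0.0.2", {"app": "web"}, ready=False),  # failing its readiness probe
    Pod("10.0.0.3", {"app": "db"}),
    Pod("10.0.0.4", {"app": "web", "tier": "frontend"}),
]
web = endpoints({"app": "web"}, pods)
print(web)                  # ['10.0.0.1', '10.0.0.4']
print(round_robin(web, 4))  # ['10.0.0.1', '10.0.0.4', '10.0.0.1', '10.0.0.4']
```

Extra labels such as `tier` do not prevent a match, and the unready Pod is excluded until it passes its readiness check.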

Declarative Configuration:

Kubernetes relies on declarative configuration: you describe the desired state rather than the explicit steps for achieving it. You specify the number of replicas, their resources, networking requirements, and other parameters in a manifest, usually written in YAML, and submit it with a command such as `kubectl apply -f`. Kubernetes controllers then create, update, or delete objects until the cluster matches this desired state, and keep correcting any drift afterwards. This declarative approach simplifies application management and reduces operational complexity.
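
A Deployment manifest is just structured data, so it can be built in Python and serialized as JSON, which `kubectl apply -f` accepts alongside YAML. The sketch below also includes a simplified two-way comparison that lists which fields differ between a live object and the desired one; real `kubectl apply` uses a more involved merge strategy (client-side three-way merge or server-side apply). The helper names, the container image tags, and the resource values are illustrative assumptions.

```python
from __future__ import annotations

import json
from typing import Any


def deployment_manifest(name: str, image: str, replicas: int) -> dict[str, Any]:
    """Desired state for a Deployment running `replicas` copies of `image`."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},  # must match the template labels
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "resources": {
                            "requests": {"cpu": "250m", "memory": "256Mi"},
                            "limits": {"memory": "512Mi"},
                        },
                    }],
                },
            },
        },
    }


def changed_fields(desired: Any, live: Any, path: str = "") -> list[str]:
    """Dotted paths where `live` differs from `desired` (desired fields only)."""
    if isinstance(desired, dict) and isinstance(live, dict):
        changes = []
        for key, value in desired.items():
            child = f"{path}.{key}" if path else key
            changes += changed_fields(value, live.get(key), child)
        return changes
    if isinstance(desired, list) and isinstance(live, list) and len(desired) == len(live):
        changes = []
        for i, (want, have) in enumerate(zip(desired, live)):
            changes += changed_fields(want, have, f"{path}[{i}]")
        return changes
    return [] if desired == live else [path]


desired = deployment_manifest("web", "nginx:1.27", replicas=3)
live = deployment_manifest("web", "nginx:1.26", replicas=2)
print(json.dumps(desired, indent=2))  # JSON that `kubectl apply -f` also accepts
print(changed_fields(desired, live))
# ['spec.replicas', 'spec.template.spec.containers[0].image']
```

You only state what the Deployment should look like; working out that two fields need to change, and how to roll that change out, is left to the tooling and controllers.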

Extensibility and Customization:

Kubernetes is an open-source platform with a vibrant community actively contributing to its development. It supports a wide range of plugins, add-ons, and integrations, such as network plugins (CNI) and storage drivers (CSI), allowing you to tailor it to your specific needs. You can also extend its API with custom resources and write custom controllers, or operators, that manage them, adapting Kubernetes to unique workflows and requirements.
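
At the core of an operator is a reconcile function that turns a custom resource into the built-in objects it needs. The Python sketch below invents a `StaticSite` kind in a hypothetical `example.com/v1` API group purely for illustration, and expands it into a Deployment and a Service. The `ownerReferences` entries mirror a real Kubernetes mechanism that lets the garbage collector delete child objects when their owner is deleted. A real controller would also watch for changes and create or update these objects through the API server, which this sketch omits.

```python
from __future__ import annotations

from typing import Any


def reconcile_static_site(site: dict[str, Any]) -> list[dict[str, Any]]:
    """Expand a hypothetical StaticSite custom resource into child objects."""
    name = site["metadata"]["name"]
    spec = site["spec"]
    labels = {"app": name}
    # Mark the children as owned by the custom resource for garbage collection.
    owner = {
        "apiVersion": site["apiVersion"],
        "kind": site["kind"],
        "name": name,
        "uid": site["metadata"]["uid"],
        "controller": True,
    }
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels, "ownerReferences": [owner]},
        "spec": {
            "replicas": spec.get("replicas", 1),
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": "web",
                    "image": spec["image"],
                    "ports": [{"containerPort": 80}],
                }]},
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "labels": labels, "ownerReferences": [owner]},
        "spec": {"selector": labels, "ports": [{"port": 80, "targetPort": 80}]},
    }
    return [deployment, service]


site = {
    "apiVersion": "example.com/v1",               # hypothetical API group
    "kind": "StaticSite",                         # hypothetical custom kind
    "metadata": {"name": "docs", "uid": "1234"},  # placeholder uid
    "spec": {"image": "nginx:1.27", "replicas": 2},
}
for obj in reconcile_static_site(site):
    print(obj["kind"], obj["metadata"]["name"])
```

Running it prints `Deployment docs` followed by `Service docs`: users manage one high-level object, and the operator maintains the lower-level ones on their behalf.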

Conclusion:

While Docker excels in packaging applications into containers, Kubernetes elevates container orchestration to a new level. Its comprehensive features for deployment, scaling, networking, self-healing, and resource management empower developers to build resilient, scalable, and highly available applications. By embracing Kubernetes, you gain a powerful platform for managing the complexities of modern application development, enabling you to focus on building innovative solutions rather than wrestling with infrastructure intricacies.