Containers have revolutionized the way applications are developed, deployed, and managed, offering a lightweight and efficient alternative to traditional virtual machines. Docker, one of the most widely adopted containerization platforms, simplifies the process of packaging software into standardized units, ensuring consistency across different environments. This guide provides a structured approach to understanding Docker, from fundamental concepts to practical implementation.

Understanding Containers and Docker

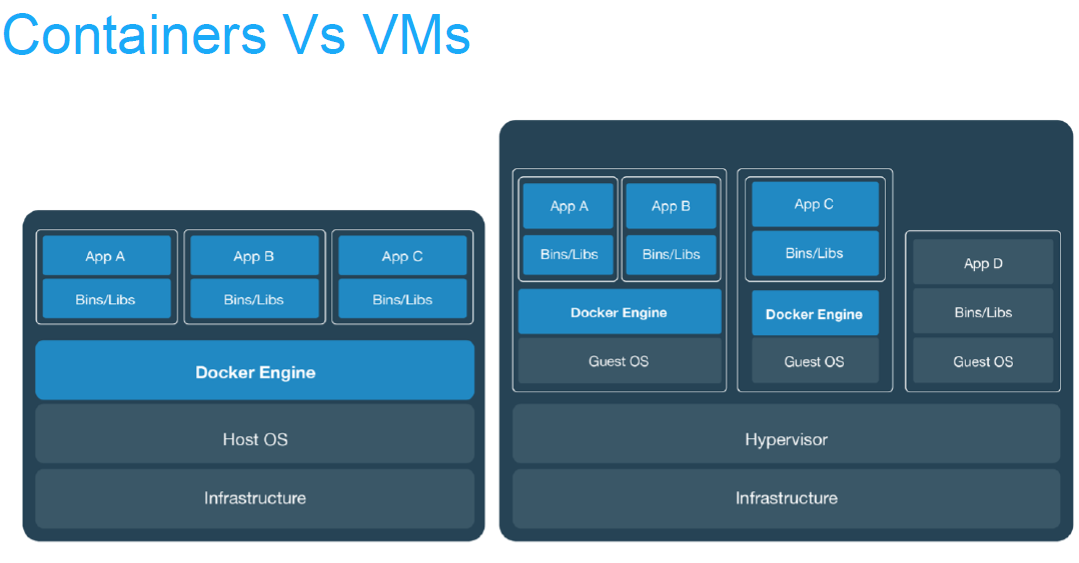

A container is an isolated environment that packages an application along with its dependencies, libraries, and configuration files. Unlike virtual machines, which require a full operating system for each instance, containers share the host OS kernel, making them faster and more resource-efficient.

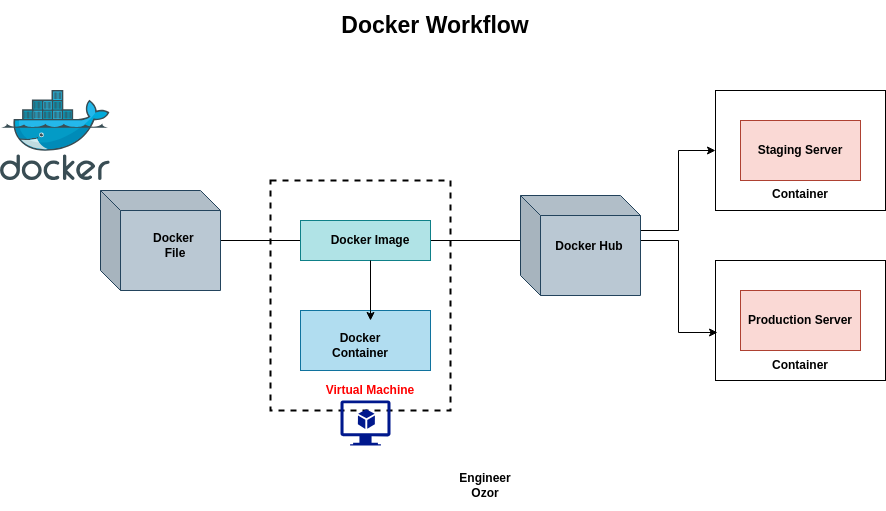

Docker automates the deployment of applications inside lightweight, portable containers. It provides tools to build, ship, and run applications seamlessly across various environments, from development to production. The key components of Docker include:

- Docker Engine: The runtime that builds and runs containers.

- Images: Immutable templates that define the application and its environment.

- Containers: Runnable instances of Docker images.

- Dockerfile: A text file with instructions for building an image.

- Docker Hub: A public registry for sharing and distributing Docker images.

Installing Docker

Before going further, install Docker on your system. Docker supports multiple operating systems, including Linux, macOS, and Windows.

On Linux

For Ubuntu and Ubuntu-based systems, run the following commands (on Debian, replace linux/ubuntu with linux/debian in both download.docker.com URLs):

# Add Docker's official GPG key:

sudo apt-get update

sudo apt-get install ca-certificates curl

sudo install -m 0755 -d /etc/apt/keyrings

sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc

sudo chmod a+r /etc/apt/keyrings/docker.asc

# Add the repository to Apt sources:

echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu \
  $(. /etc/os-release && echo "${UBUNTU_CODENAME:-$VERSION_CODENAME}") stable" | \
  sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt-get update

sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

Verify the installation with:

docker --version
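docker --version only reports the version of the client binary, so it succeeds even when the Docker daemon is stopped. A minimal Bash sketch that also checks the daemon follows; docker_ready is a hypothetical helper name, and it relies on docker info failing when the daemon cannot be reached.

```shell
#!/usr/bin/env bash
# docker_ready (hypothetical helper): succeeds only if the docker CLI is
# installed AND the Docker daemon answers requests.
docker_ready() {
  # Is the client binary (or a shell function named docker) available?
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker CLI not found" >&2
    return 1
  fi
  # docker info needs a reachable daemon; it fails if the daemon is stopped
  # or if the current user may not access the Docker socket.
  if ! docker info >/dev/null 2>&1; then
    echo "docker CLI found, but the daemon is not reachable" >&2
    return 1
  fi
  echo "docker is ready"
}
```

Usage: docker_ready && docker run hello-world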

On macOS/Windows

Docker Desktop provides a GUI-based installer for macOS and Windows. Download it from the official Docker website and follow the installation wizard.

Running Your First Container

Once Docker is installed, the next step is to run a simple container. The hello-world image is a traditional starting point:

docker run hello-world

This command pulls the hello-world image from Docker Hub (if not already cached) and executes it. The output confirms that Docker is working correctly.

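If docker commands fail on Linux right after installation, type groups in your terminal and press Enter. If docker does not show up in the list, the failure is most likely a permission issue: your user is not yet allowed to talk to the Docker daemon. To resolve it, add your user to the docker group:

sudo usermod -aG docker $USER

Then log out and back in (or run newgrp docker) so the new group membership takes effect. Be aware that membership in the docker group grants root-level privileges on the host.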
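Group changes made with usermod only apply to new login sessions, which is why docker can keep failing in the terminal you already have open. A small Bash sketch to check the current session; in_docker_group is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# in_docker_group (hypothetical helper): succeeds if the *current session*
# has the "docker" group. `id -nG` with no user argument lists the groups
# of the running process, so a fresh `usermod -aG docker` shows up here
# only after you log out and back in (or start a shell with `newgrp docker`).
in_docker_group() {
  id -nG | tr ' ' '\n' | grep -qx docker
}

if in_docker_group; then
  echo "current session is in the docker group"
else
  echo "not in the docker group yet: run 'sudo usermod -aG docker \$USER'," \
       "then log out and back in (or run 'newgrp docker')"
fi
```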

Working with Docker Images

Docker images serve as blueprints for containers. They can be pulled from public registries like Docker Hub or built from a Dockerfile.

Pulling an Image

A Docker image is a read-only template that contains the application code, runtime, system tools, libraries, and dependencies needed to run an application. Think of it like a bunch of code wrapped inside a box, not like a .jpg file.

To download an image, use:

docker pull nginx

This fetches the nginx image tagged latest (the default tag when none is given), which can then be run as a container:

docker run -d -p 8080:80 --name mynginx nginx

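Here:

-d runs the container in detached (background) mode, -p 8080:80 maps port 8080 on the host to port 80 in the container, and --name assigns the custom name mynginx. Once the container is running, opening http://localhost:8080 in a browser shows the nginx welcome page.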

Building a Custom Image

A custom Docker image is a user-defined image built from a base image (or from scratch) that contains the specific application, dependencies, and configuration for a particular project. It packages your software and its environment into a portable, reproducible unit for consistent deployment across different environments.

A Dockerfile defines how an image is constructed. Below is an example for a simple Python app:

# Use the official Python 3.9 slim image as the base

FROM python:3.9-slim

# Set /app as the working directory inside the image (created if it does not exist)

WORKDIR /app

# Copy the build context (the current project directory) into /app

COPY . /app

# Install Flask inside the image

RUN pip install flask

# Document that the app listens on port 5000; publishing it to the host still requires -p at run time

EXPOSE 5000

# Start the application

CMD ["python", "app.py"]

To build the image:

docker build -t my-python-app .

Running the container follows the same pattern as before:

docker run -p 5000:5000 my-python-app
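The Dockerfile above expects an app.py in the project directory, which this guide does not show. A minimal, hypothetical Flask app, written here with a shell heredoc, could look like this. The important detail is binding to 0.0.0.0: Flask's development server listens only on 127.0.0.1 by default, which is not reachable through -p 5000:5000.

```shell
#!/usr/bin/env bash
# Create a minimal, hypothetical app.py next to the Dockerfile.
cat > app.py <<'EOF'
from flask import Flask

app = Flask(__name__)


@app.route("/")
def index():
    return "Hello from inside a container!"


if __name__ == "__main__":
    # Listen on all interfaces so `docker run -p 5000:5000` can reach the app;
    # Flask's default host (127.0.0.1) is only reachable from inside the container.
    app.run(host="0.0.0.0", port=5000)
EOF
```

After rebuilding with docker build -t my-python-app . and running the container, http://localhost:5000 should return the greeting.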

Managing Containers

Docker provides several commands to inspect and manage containers:

- List running containers: docker ps

- List all containers (including stopped ones): docker ps -a

- Stop a container: docker stop <container_id>

- Remove a container: docker rm <container_id>

- View logs: docker logs <container_id>
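Building on docker ps -a, a hedged Bash sketch for tidying up: list_exited and remove_exited are hypothetical helper names wrapping the real docker ps --filter/--format options and docker container prune.

```shell
#!/usr/bin/env bash
# list_exited (hypothetical helper): show stopped containers with their status,
# which includes the exit code, e.g. "Exited (1) 5 minutes ago".
list_exited() {
  docker ps -a --filter status=exited --format '{{.Names}}\t{{.Status}}'
}

# remove_exited (hypothetical helper): remove all stopped containers
# without a confirmation prompt (-f).
remove_exited() {
  docker container prune -f
}
```

Checking list_exited before pruning tells you which containers exited with a non-zero code and may deserve a look with docker logs.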

For more advanced operations, Docker Compose allows defining multi-container applications using a YAML file.
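To make that concrete, here is a hedged sketch that writes a compose.yaml for the Python app built earlier plus a Redis service. The redis service and its redis:7-alpine tag are illustrative assumptions; the app above does not use Redis. Compose places both services on a shared project network, where they can reach each other by service name.

```shell
#!/usr/bin/env bash
# Write a hypothetical two-service compose.yaml next to the Dockerfile.
cat > compose.yaml <<'EOF'
services:
  web:
    build: .              # build from the Dockerfile in this directory
    ports:
      - "5000:5000"       # host:container, same as -p 5000:5000
    depends_on:
      - redis             # start redis before web (start order only)
  redis:
    image: redis:7-alpine # illustrative extra service
EOF

# Typical lifecycle (requires a running Docker daemon):
#   docker compose up -d     # build (if needed) and start all services
#   docker compose ps        # list the project's containers
#   docker compose down      # stop and remove them
```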

Networking and Storage in Docker

A Docker network allows containers to communicate with each other and with the outside world, while also isolating groups of containers from one another. You can think of it like Bluetooth, which lets your phone and an external speaker communicate.

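Containers communicate with each other through Docker networks. By default, containers join the built-in bridge network, where they can reach each other only by IP address. On a user-defined network, Docker also provides built-in DNS, so containers can reach each other by container name.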

To create a custom network:

docker network create my-network

Then, run containers within this network:

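docker run -d --network=my-network --name container1 nginx

docker run -it --network=my-network --name container2 alpine sh

The first command starts nginx in the background; the second opens a shell inside an alpine container (without a command, alpine would exit immediately). Because both containers are on the same user-defined network, container2 can reach container1 by name: running wget -qO- http://container1 in that shell prints the nginx welcome page.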
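The same name-based lookup can be demonstrated without an interactive shell. A hedged Bash sketch: net_demo, demo-net, and demo-web are hypothetical names chosen so they do not collide with the containers created above, and the retry loop gives nginx a few seconds to start.

```shell
#!/usr/bin/env bash
# net_demo (hypothetical helper): show name-based DNS on a user-defined network.
net_demo() {
  local net="${1:-demo-net}"
  docker network create "$net" >/dev/null
  # nginx in the background, reachable on this network as "demo-web"
  docker run -d --network "$net" --name demo-web nginx >/dev/null
  # One-off alpine container: fetch the nginx page by container name,
  # retrying for up to ~5 seconds while nginx starts.
  docker run --rm --network "$net" alpine sh -c \
    'for i in 1 2 3 4 5; do wget -qO- http://demo-web && exit 0; sleep 1; done; exit 1'
  # Clean up the demo container and network
  docker rm -f demo-web >/dev/null
  docker network rm "$net" >/dev/null
}
```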

Docker Volume

Persistent storage is achieved using Docker volumes. Data written to a container's own filesystem is lost when the container is removed, whereas data in a volume lives outside the container and survives removal and recreation. Think of a volume like an external USB drive: you can unplug it from one container and plug it into another, and the files stay on the drive. The data is gone only if you delete the volume itself (for example with docker volume rm my-volume).

Create a volume:

docker volume create my-volume

Mount it to a container:

docker run -v my-volume:/data alpine
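To see the persistence in action, a hedged Bash sketch: one throwaway container writes a file into the volume, and a second, brand-new container reads it back. volume_demo and note.txt are hypothetical names; docker volume create is a no-op if the volume already exists.

```shell
#!/usr/bin/env bash
# volume_demo (hypothetical helper): data written by one container is read
# back by a *different* container because it lives in the named volume.
volume_demo() {
  local vol="${1:-my-volume}"
  docker volume create "$vol" >/dev/null
  # First container writes a file into the volume, then is removed (--rm).
  docker run --rm -v "$vol":/data alpine sh -c 'echo "hello from container 1" > /data/note.txt'
  # Second container mounts the same volume and prints the file.
  docker run --rm -v "$vol":/data alpine cat /data/note.txt
}
```

Running volume_demo should print "hello from container 1" even though the container that wrote it no longer exists.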

Best Practices for Docker

To optimize Docker usage, consider the following:

- Keep Images Small: Use lightweight base images (e.g., alpine variants).

- Leverage Layers Efficiently: Group related commands in the Dockerfile to minimize layers.

- Use .dockerignore: Exclude unnecessary files from the build context.

- Secure Images: Avoid running containers as root and scan images for vulnerabilities using tools like Snyk.

- Monitor Containers: Utilize tools like Prometheus and Grafana for observability.
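Several of these practices can be sketched for the Python example from earlier. The script writes a .dockerignore and a hypothetical Dockerfile.secure; the ignore entries, the requirements.txt file, and the appuser name are assumptions to adapt to your project, not part of the original example.

```shell
#!/usr/bin/env bash
# 1) Keep the build context small: typical entries for a Python project
#    (assumptions; adjust them to your repository).
cat > .dockerignore <<'EOF'
.git
__pycache__/
*.pyc
.venv/
.env
EOF

# 2) A hypothetical variant of the earlier Dockerfile that caches the
#    dependency layer and runs as a non-root user. It assumes a
#    requirements.txt (e.g. containing "flask") exists in the project.
cat > Dockerfile.secure <<'EOF'
FROM python:3.9-slim
WORKDIR /app
# Dependencies change rarely: copy and install them first so this layer
# is reused from the build cache when only the source code changes.
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
# Create an unprivileged user and run the app as that user.
RUN useradd --create-home appuser
USER appuser
EXPOSE 5000
CMD ["python", "app.py"]
EOF

# Build it with: docker build -f Dockerfile.secure -t my-python-app:secure .
```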

Common Challenges and Troubleshooting

Beginners often encounter issues like port conflicts, permission errors, or unexpected container exits. The docker logs and docker inspect commands help diagnose problems.

If a container crashes, restart policies (--restart unless-stopped) can ensure automatic recovery. For dependency issues, ensure all required services are linked correctly in Docker Compose.
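A hedged Bash sketch combining both ideas: start a container with a restart policy, then use docker inspect to read back the policy and the last exit code when diagnosing unexpected exits. The helper names run_with_restart, restart_policy, and exit_code are hypothetical; the Go-template fields are those reported by docker inspect.

```shell
#!/usr/bin/env bash
# run_with_restart (hypothetical helper): start a detached container that
# Docker restarts automatically unless you stop it explicitly.
run_with_restart() {
  local name="$1" image="$2"
  docker run -d --restart unless-stopped --name "$name" "$image"
}

# restart_policy (hypothetical helper): print a container's restart policy.
restart_policy() {
  docker inspect -f '{{.HostConfig.RestartPolicy.Name}}' "$1"
}

# exit_code (hypothetical helper): print the exit code of the container's
# last run, useful when a container keeps stopping unexpectedly.
exit_code() {
  docker inspect -f '{{.State.ExitCode}}' "$1"
}
```

For a container that already exists, docker update --restart unless-stopped <container_id> changes the policy without recreating it.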

Next Steps

After mastering the basics, you can move on to orchestration tools like Kubernetes or Docker Swarm for managing clusters of containers. Continuous integration pipelines (CI/CD) can also integrate Docker for automated deployments.

Docker’s ecosystem is vast, with constant updates and community contributions. Staying updated through official Docker documentation and forums ensures proficiency in modern containerization techniques.