In an era where artificial intelligence (AI) is transforming industries and personal projects alike, running powerful language models like GPT (Generative Pre-trained Transformer) on your own computer offers real advantages: it saves money, keeps your data private, and lets you customize the model to suit specific needs. This guide walks you through setting up and running GPT-style models offline on a Linux system using three tools: Docker, Ollama, and Open WebUI.

With this setup, you can even host your own ChatGPT-like platform online and compete in the market for generative AI text models.

Why Run GPT Models Locally?

Before diving into the technical setup, it’s essential to understand the benefits of running GPT models on your local machine:

- Cost Efficiency: Cloud-based AI services often incur significant costs, especially with extensive usage. Running models locally eliminates these usage fees, so once you have the hardware, you can use AI for free.

- Data Privacy: Keeping your data on local hardware ensures that sensitive information remains confidential, a critical factor for many businesses and individuals.

- Customization: Local deployment allows for fine-tuning models to better suit specific applications, providing greater control over their behavior.

- Offline Accessibility: Operating without an internet connection ensures uninterrupted access to AI capabilities, which is essential for remote or secure environments. No more glitches, delays, or messages like “Server is busy”. Your model is fully functional offline, and its speed depends only on your own hardware.

Introducing Ollama and Open WebUI

To facilitate the local deployment of GPT models, it’s essential to understand the primary tools we’ll be using:

Ollama

Ollama is an open-source framework that enables users to run large language models (LLMs) directly on their local systems. It offers a straightforward API for creating, running, and managing these models, simplifying the complexities involved in deploying AI locally. Ollama supports various LLMs, including Llama 3.1, Mistral, and others, making it a versatile choice for different applications. Learn more about Ollama.

Open WebUI

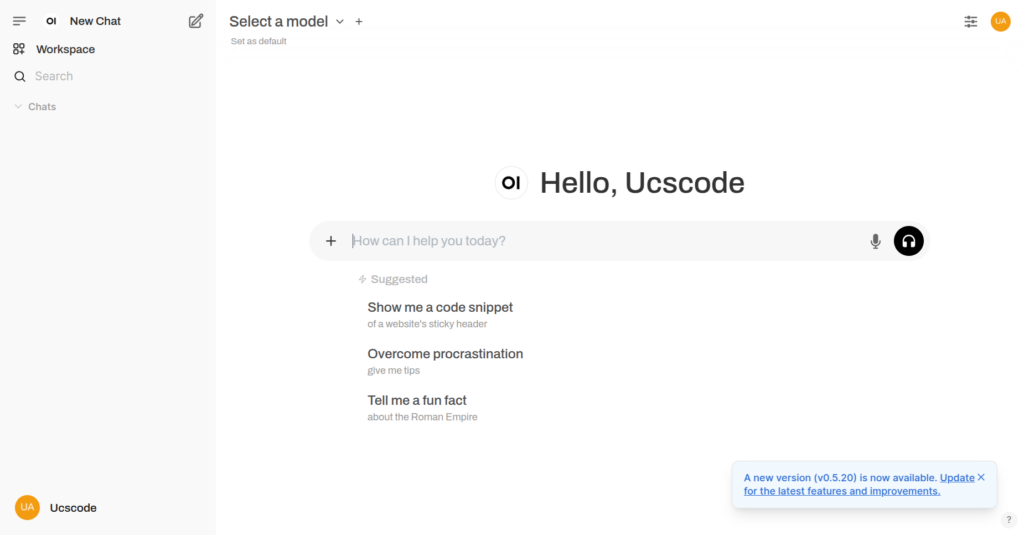

Open WebUI is a user-friendly, self-hosted web interface designed to operate entirely offline. It supports various LLM runners, including Ollama and OpenAI-compatible APIs, providing an intuitive platform for interacting with AI models. With features like model selection, conversation history, and code formatting, Open WebUI enhances the user experience when working with LLMs.

Prerequisites

Before proceeding, ensure your Linux system meets the following requirements:

- Operating System: A recent version of Linux (Ubuntu 22.04 is recommended).

- Hardware: A multi-core processor and at least 8 GB of RAM (16 GB or more is recommended). More powerful hardware will improve performance, especially with larger models.

- Docker: Ensure Docker is installed and running on your system.
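If you would like to verify these requirements from a terminal, the following is a minimal sketch. The helper names `kib_to_gib` and `ram_verdict` are made up for illustration, and the thresholds simply mirror the 8 GB minimum and 16 GB recommendation above. It assumes a Linux system that exposes `/proc/meminfo`.

```shell
#!/bin/sh
# Sketch: check the prerequisites listed above (RAM, CPU cores, Docker).
# kib_to_gib and ram_verdict are illustrative helper names, not part of any tool.

# kib_to_gib: convert a KiB count (as in /proc/meminfo) to GiB, rounded to nearest.
kib_to_gib() {
    echo $(( ($1 + 524288) / 1048576 ))
}

# ram_verdict: classify a RAM size in GiB against the guide's 8/16 GB guidance.
ram_verdict() {
    if [ "$1" -ge 16 ]; then
        echo "recommended"
    elif [ "$1" -ge 8 ]; then
        echo "minimum"
    else
        echo "insufficient"
    fi
}

# Report total memory if /proc/meminfo is readable.
if [ -r /proc/meminfo ]; then
    mem_kib=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
    if [ -n "$mem_kib" ]; then
        mem_gib=$(kib_to_gib "$mem_kib")
        echo "RAM: ${mem_gib} GiB ($(ram_verdict "$mem_gib"))"
    fi
fi

# Report the number of online CPU cores.
cores=$(getconf _NPROCESSORS_ONLN 2>/dev/null || echo "unknown")
echo "CPU cores: $cores"

# Check that the docker CLI exists and that the daemon answers.
if command -v docker >/dev/null 2>&1; then
    if docker info >/dev/null 2>&1; then
        echo "Docker: installed and running"
    else
        echo "Docker: installed, but the daemon is not reachable"
    fi
else
    echo "Docker: not found"
fi
```

If Docker is reported as installed but not reachable, the daemon may be stopped or your user may lack permission to talk to it.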

Step-by-Step Installation Guide

1. Install Ollama

Ollama simplifies the process of running LLMs locally. Visit the Ollama Download Page to download the ollama binary for your Linux distribution. After installation, confirm that Ollama is installed correctly by running:

ollama --version

You should see the installed version number displayed. To start the Ollama service, run the following command:

ollama serve

If Ollama is already running, you’ll get a message like:

Error: listen tcp 127.0.0.1:11434: bind: address already in use

For more Ollama options, run the following command:

ollama --help

This lists all the command options that can be used with Ollama.
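Besides the CLI, you can confirm that the server is answering over HTTP. The sketch below assumes Ollama is listening on its default address, 127.0.0.1:11434, and uses the `/api/version` and `/api/tags` endpoints of Ollama's REST API. The `OLLAMA_URL` variable and the `ollama_is_up` helper are names chosen for this example only.

```shell
#!/bin/sh
# Sketch: check whether an Ollama server answers on the default address.
# OLLAMA_URL and ollama_is_up are illustrative names for this example.
OLLAMA_URL="${OLLAMA_URL:-http://127.0.0.1:11434}"

# ollama_is_up: succeed if the server at the given base URL answers within 2 seconds.
ollama_is_up() {
    curl -fsS --max-time 2 "$1/api/version" >/dev/null 2>&1
}

if ollama_is_up "$OLLAMA_URL"; then
    echo "Ollama is running at $OLLAMA_URL"
    # List the models that have been pulled so far (JSON output).
    curl -fsS "$OLLAMA_URL/api/tags"
    echo
else
    echo "Ollama is not reachable at $OLLAMA_URL; start it with: ollama serve"
fi
```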

2. Install Open WebUI (Using Docker)

Open WebUI provides a graphical interface (similar to ChatGPT’s) for interacting with your models. You can install Open WebUI using pip (Python) and a bit of configuration, but for a more straightforward process, we’ll use Docker.

Run the command below to start the Open WebUI container.

docker run -d --network=host -v open-webui:/app/backend/data -e OLLAMA_BASE_URL=http://127.0.0.1:11434 --name open-webui --restart always ghcr.io/open-webui/open-webui:main

Note: This command comes from the Open WebUI troubleshooting guide. It is used here because the container could not connect with the default 3000:8080 port mapping.

For other installation procedures, check out the Open WebUI GitHub repository.

The command runs the Open WebUI container on the host’s network stack, so the interface can be accessed directly at http://127.0.0.1:8080
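The container can take a little while to start, so the page may not load immediately. As a rough sketch, you can poll the address until it responds. The `wait_for_url` helper and the `WEBUI_URL` and `WEBUI_TRIES` variables are hypothetical names used only in this example, which assumes the host-network setup described above.

```shell
#!/bin/sh
# Sketch: wait until Open WebUI answers on the host network.
# WEBUI_URL, WEBUI_TRIES and wait_for_url are illustrative names.
WEBUI_URL="${WEBUI_URL:-http://127.0.0.1:8080}"

# wait_for_url URL TRIES: poll URL about once per second, up to TRIES times.
wait_for_url() {
    url=$1
    tries=$2
    while [ "$tries" -gt 0 ]; do
        if curl -fsS --max-time 2 -o /dev/null "$url" 2>/dev/null; then
            return 0
        fi
        tries=$((tries - 1))
        # Pause between attempts, but not after the last one.
        [ "$tries" -gt 0 ] && sleep 1
    done
    return 1
}

if wait_for_url "$WEBUI_URL" "${WEBUI_TRIES:-3}"; then
    echo "Open WebUI is up at $WEBUI_URL"
else
    echo "Open WebUI did not answer at $WEBUI_URL; inspect it with: docker logs open-webui"
fi
```

On a first start you may want a larger `WEBUI_TRIES` value, such as 30, since initialization can take longer than a few seconds.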

To stop and remove the Open WebUI container, run the following command:

docker stop open-webui && docker rm open-webui
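Because the data lives in the named `open-webui` volume, removing the container does not delete your chats or settings. That makes it possible to refresh the container with a newer image and start it again with the same options. The following is a sketch of that routine, not an official update procedure. The `run` and `update_open_webui` helpers and the `DRY_RUN` switch are hypothetical names introduced here so the commands can be previewed before anything is executed.

```shell
#!/bin/sh
# Sketch: refresh the Open WebUI container while keeping its data volume.
# run, update_open_webui and DRY_RUN are illustrative names for this example.
IMAGE="ghcr.io/open-webui/open-webui:main"
NAME="open-webui"

# run: print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

update_open_webui() {
    # Fetch the newest image first; stop early if the pull fails.
    run docker pull "$IMAGE" || return 1
    # Ignore errors here: the container may not exist yet.
    run docker stop "$NAME" || true
    run docker rm "$NAME" || true
    # Start the container again with the same options used in this guide.
    run docker run -d --network=host \
        -v open-webui:/app/backend/data \
        -e OLLAMA_BASE_URL=http://127.0.0.1:11434 \
        --name "$NAME" --restart always "$IMAGE"
}

# Preview the commands without touching Docker.
(DRY_RUN=1; update_open_webui)
```

Calling `update_open_webui` without `DRY_RUN=1` executes the same four commands for real.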

At this point, you have a functional Open WebUI ready to host conversations and a running Ollama server ready to process and generate text. Only one thing is missing: a GPT model.

For Open WebUI and Ollama to work together, you need to install and use a model. GPT models can be large and resource-intensive, but for the purpose of this guide, we will start with one of the most lightweight yet efficient models available.

TinyLlama

TinyLlama is a lightweight large language model (LLM) designed to run efficiently on resource-constrained hardware, such as machines with only a CPU or a lower-end GPU. It is a compact model of about 1.1 billion parameters that follows the architecture of Meta’s much larger Llama 2 models, making it ideal for running AI locally without powerful computing resources.

Installing TinyLlama with Ollama

You can install TinyLlama locally using Ollama by pulling the model. Run the command below to pull the model:

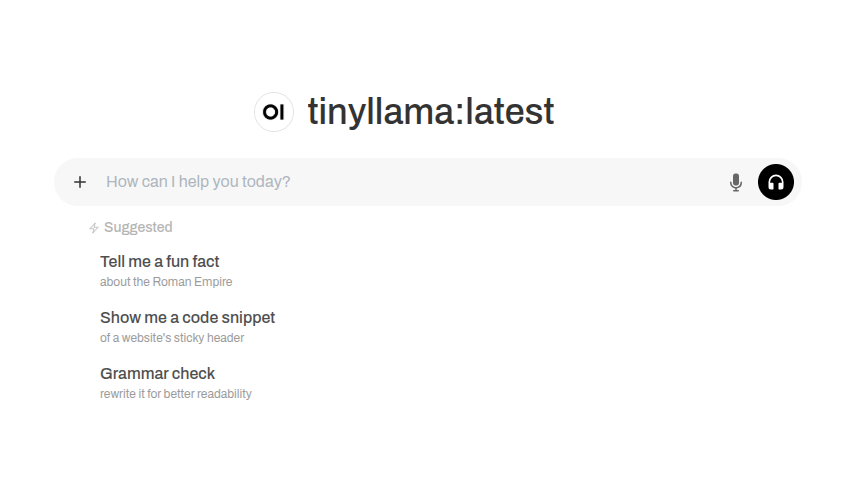

ollama pull tinyllama

Once Ollama has pulled the tinyllama model, you can begin to use your own ChatGPT-like TinyLlama platform, which works seamlessly offline without an internet connection. To do this, click “Select a model” on the Open WebUI dashboard and select the tinyllama model.

You can also search for it directly on the Open WebUI dashboard by typing the full model name “tinyllama” and clicking “Pull … from Ollama.com” to download the model and use it right away.
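The model can also be queried without the web interface, either with `ollama run tinyllama` in a terminal or through Ollama's REST API. The sketch below posts a prompt to the `/api/generate` endpoint with streaming turned off. The `json_escape` and `build_generate_payload` helpers and the `OLLAMA_URL` variable are illustrative names, and the escaping covers only backslashes and double quotes, so multi-line prompts would need more care.

```shell
#!/bin/sh
# Sketch: send one prompt to a local Ollama server via /api/generate.
# OLLAMA_URL, json_escape and build_generate_payload are illustrative names.
OLLAMA_URL="${OLLAMA_URL:-http://127.0.0.1:11434}"

# json_escape: escape backslashes and double quotes for use inside a JSON string.
# Newlines and other control characters are not handled in this sketch.
json_escape() {
    printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# build_generate_payload MODEL PROMPT: print the JSON request body.
build_generate_payload() {
    printf '{"model":"%s","prompt":"%s","stream":false}' \
        "$(json_escape "$1")" "$(json_escape "$2")"
}

# Only send the request if the server is reachable.
if curl -fsS --max-time 2 "$OLLAMA_URL/api/version" >/dev/null 2>&1; then
    build_generate_payload tinyllama "Why is the sky blue?" |
        curl -fsS "$OLLAMA_URL/api/generate" -d @-
    echo
else
    echo "Ollama is not reachable at $OLLAMA_URL; start it with: ollama serve" >&2
fi
```

With `"stream": false`, the server replies with a single JSON object whose `response` field holds the generated text.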

If you need a larger model and have the computing power to run it (ideally with an Nvidia GPU), you can install Llama 3.1, Mistral, or any other larger model from the Ollama model library.
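How large a model your machine can handle depends mostly on memory. As a rule of thumb taken from the Ollama README, about 8 GB of RAM is needed for 7B models, 16 GB for 13B models, and 32 GB for 33B models. The sketch below turns that guidance into a quick check. The `suggest_model_size` helper is a made-up name, and the result is only a starting point, not a guarantee of good performance.

```shell
#!/bin/sh
# Sketch: suggest a model size class from the installed RAM.
# suggest_model_size is an illustrative helper; the thresholds follow the
# rule of thumb quoted above and are not hard limits.
suggest_model_size() {
    if [ "$1" -ge 32 ]; then
        echo "33B"
    elif [ "$1" -ge 16 ]; then
        echo "13B"
    elif [ "$1" -ge 8 ]; then
        echo "7B"
    else
        echo "small (for example tinyllama)"
    fi
}

# Read total RAM in GiB (rounded to nearest) on Linux.
if [ -r /proc/meminfo ]; then
    ram_gib=$(awk '/^MemTotal:/ {printf "%d", ($2 + 524288) / 1048576}' /proc/meminfo)
    echo "RAM: ${ram_gib} GiB, suggested model size: $(suggest_model_size "$ram_gib")"
fi

# Report whether an Nvidia GPU driver tool is available.
if command -v nvidia-smi >/dev/null 2>&1; then
    echo "nvidia-smi found: an Nvidia GPU may be available"
else
    echo "nvidia-smi not found: models will likely run on the CPU"
fi

# Show which models are already installed, if the ollama CLI is present.
if command -v ollama >/dev/null 2>&1; then
    ollama list
fi
```

A larger model is pulled the same way as tinyllama, for example with `ollama pull llama3.1`.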

Tip: visit 127.0.0.1:8080/admin in your browser to access the Open WebUI admin panel.

Congratulations: You’ve successfully installed Ollama and Open WebUI

Running GPT models locally with Ollama and Open WebUI provides control, privacy, and cost savings. With this guide, you can:

- Install and configure Ollama & Open WebUI

- Run LLMs on Linux without internet dependency

- Optimize AI performance for your hardware

- Explore real-world applications